Installing LeoFS

LeoFS Install Guide

We are setting up 2 LeoFS zones with the following IP addresses and services. The FiFo zone will be created separately by following the regular installation guide, but it is included in the table for clarity. For the purposes of this guide we assume you have only 1 hypervisor (physical server). For production use, the recommended setup is a distributed LeoFS cluster deployed on 5 separate servers across 5 different zones.

Please consult Zone requirements for details on the dataset.

LeoFS utilises 'Layer 2' broadcast traffic for communication. As such, your LeoFS zones need to be on the same Layer 2 network or VLAN in order to function.

Our setup will be as follows:

| Zone Name | IP Address | Services | Hypervisor |
|---|---|---|---|
| LeoFS Zone 1 | 10.1.1.21 | Manager0, Gateway0, Storage0 | 1 |
| LeoFS Zone 2 | 10.1.1.22 | Manager1 | 1 (2 if it exists) |
| FiFo Zone | 10.1.1.23 | Sniffle, Snarl, Howl, Wiggle, Jingles | 1 |

The storage.s3.host setting will always be the IP address of the zone where the “Gateway” service resides.

We will also need to generate a random cookie to be used as part of the setup.

```
[root@1.leofs ~]# openssl rand -base64 32 | fold -w16 | head -n1
QvTnSK0vrCohKMkw
```

| Our Random Cookie | QvTnSK0vrCohKMkw |
|---|---|
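If openssl is not available, you can produce a comparable 16-character cookie with standard tools. This is a minimal sketch and is not part of the official procedure. The gen_cookie function name is our own. Restricting the cookie to letters and digits is an assumption, made so the value can be pasted into the config files without quoting.

```shell
#!/bin/sh
# gen_cookie: hypothetical helper (not part of LeoFS or FiFo) that prints a
# random 16-character cookie made only of letters and digits.
gen_cookie() {
  # tr -dc deletes every byte outside A-Z, a-z and 0-9 from the random
  # stream; LC_ALL=C makes tr treat the input as raw bytes instead of
  # rejecting invalid multibyte sequences. fold and head then keep the
  # first 16 characters, as in the openssl command above.
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | fold -w 16 | head -n 1
}

COOKIE=$(gen_cookie)
echo "distributed_cookie = ${COOKIE}"
```

Whichever method you use, the same cookie must appear in every leo_manager.conf, leo_gateway.conf and leo_storage.conf file.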

DNS Setup

LeoFS provides an S3-compatible API and as such requires a resolvable host name or FQDN to function. Either a DNS server or entries in /etc/hosts will work. Using bare IP addresses will NOT work.

A good alternative for test systems is the public wildcard DNS service xip.io, which resolves any hostname of the form *.<ip>.xip.io to <ip>. In this guide we will use xip.io. For production, however, we recommend that your cloud storage does not depend on any third-party or external DNS service.

If our LeoFS storage zone IP is 10.1.1.21, the hostname storage.10.1.1.21.xip.io will resolve to the IP address 10.1.1.21:

```
[root@1.leofs ~]# dig @8.8.8.8 storage.10.1.1.21.xip.io
; <<>> DiG 9.8.3-P1 <<>> @8.8.8.8 storage.10.1.1.21.xip.io
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 16337
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;storage.10.1.1.21.xip.io. IN A

;; ANSWER SECTION:
storage.10.1.1.21.xip.io. 300 IN A 10.1.1.21

;; Query time: 303 msec
;; SERVER: 8.8.8.8#53(8.8.8.8)
;; WHEN: Mon Jun 1 20:41:48 2015
;; MSG SIZE rcvd: 58
```
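The naming scheme can be captured in a small helper, which is handy when scripting the rest of the setup. This is a sketch only. xip_host is a hypothetical function name, and the IPv4 check is a simple pattern match, not a full validator.

```shell
#!/bin/sh
# xip_host: hypothetical helper that builds a hostname of the form
# <prefix>.<ip>.xip.io for a dotted-quad IPv4 address.
xip_host() {
  prefix=$1
  ip=$2
  # Basic sanity check: four dot-separated groups of 1-3 digits.
  # (This does not reject out-of-range octets such as 999.)
  if ! printf '%s\n' "$ip" | grep -Eq '^[0-9]{1,3}(\.[0-9]{1,3}){3}$'; then
    echo "invalid IPv4 address: $ip" >&2
    return 1
  fi
  printf '%s.%s.xip.io\n' "$prefix" "$ip"
}

xip_host storage 10.1.1.21
```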

Custom DNS

If your setup uses internal DNS or host file entries, then all the LeoFS service host names should be resolvable within each LeoFS zone as well as from the global zone of every hypervisor.

S3 needs a DNS entry for every bucket. So if you configure the endpoint storage.project-fifo.net and use the default buckets, you will need DNS entries for:

- storage.project-fifo.net
- fifo.storage.project-fifo.net
- fifo-backups.storage.project-fifo.net
- fifo-images.storage.project-fifo.net
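For the /etc/hosts approach, the entries listed above can be generated rather than typed by hand. This is a sketch under assumptions. hosts_entries is a hypothetical helper, and every name is pointed at the gateway IP (10.1.1.21 in our setup), since the S3 host is always the zone running the Gateway service.

```shell
#!/bin/sh
# hosts_entries: hypothetical helper that prints /etc/hosts lines for an
# S3 endpoint and each bucket name, all pointing at the gateway IP.
# Usage: hosts_entries <gateway-ip> <endpoint> [bucket...]
hosts_entries() {
  ip=$1
  endpoint=$2
  shift 2
  # The bare endpoint itself needs an entry ...
  printf '%s\t%s\n' "$ip" "$endpoint"
  # ... and so does every bucket, addressed as <bucket>.<endpoint>.
  for bucket in "$@"; do
    printf '%s\t%s.%s\n' "$ip" "$bucket" "$endpoint"
  done
}

hosts_entries 10.1.1.21 storage.project-fifo.net fifo fifo-backups fifo-images
```

Append the output (for example with >> /etc/hosts) in each LeoFS zone and in the global zone of every hypervisor.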

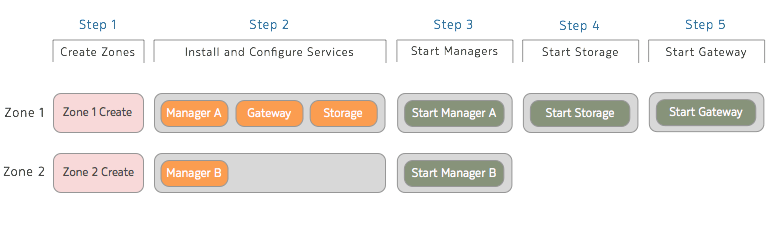

Installation Steps Overview

(Step 1) Create Zones

From the GZ (global zone), we import the base dataset that we will use for our LeoFS zones and then confirm that it is installed:

```shell
imgadm update
imgadm import e1faace4-e19b-11e5-928b-83849e2fd94a
imgadm list | grep e1faace4-e19b-11e5-928b-83849e2fd94a
```

If installed successfully you should see:

```
e1faace4-e19b-11e5-928b-83849e2fd94a minimal-64-lts 15.4.1 smartos zone-dataset 2016-03-03
```

Sample contents of leo-zone1.json

```json
{
  "autoboot": true,
  "brand": "joyent",
  "image_uuid": "e1faace4-e19b-11e5-928b-83849e2fd94a",
  "delegate_dataset": true,
  "max_physical_memory": 3072,
  "cpu_cap": 100,
  "alias": "1.leofs",
  "quota": "80",
  "resolvers": [
    "8.8.8.8",
    "8.8.4.4"
  ],
  "nics": [
    {
      "interface": "net0",
      "nic_tag": "admin",
      "ip": "10.1.1.21",
      "gateway": "10.1.1.1",
      "netmask": "255.255.255.0"
    }
  ]
}
```

Sample contents of leo-zone2.json

```json
{
  "autoboot": true,
  "brand": "joyent",
  "image_uuid": "e1faace4-e19b-11e5-928b-83849e2fd94a",
  "delegate_dataset": true,
  "max_physical_memory": 512,
  "cpu_cap": 100,
  "alias": "2.leofs",
  "quota": "20",
  "resolvers": [
    "8.8.8.8",
    "8.8.4.4"
  ],
  "nics": [
    {
      "interface": "net0",
      "nic_tag": "admin",
      "ip": "10.1.1.22",
      "gateway": "10.1.1.1",
      "netmask": "255.255.255.0"
    }
  ]
}
```
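The two payloads differ only in alias, IP address, memory and quota, so they can also be generated from one template instead of being typed in with vi. This is a minimal sketch that reuses the values from the samples above. make_zone_json is a hypothetical helper; following vmadm conventions, max_physical_memory is given in MiB and quota in GiB.

```shell
#!/bin/sh
# make_zone_json: hypothetical helper that prints a vmadm payload for a
# LeoFS zone. Values not passed as arguments (image, resolvers, gateway,
# netmask, nic_tag) are copied from the sample files.
# Usage: make_zone_json <alias> <ip> <max_physical_memory MiB> <quota GiB>
make_zone_json() {
  cat <<EOF
{
  "autoboot": true,
  "brand": "joyent",
  "image_uuid": "e1faace4-e19b-11e5-928b-83849e2fd94a",
  "delegate_dataset": true,
  "max_physical_memory": $3,
  "cpu_cap": 100,
  "alias": "$1",
  "quota": "$4",
  "resolvers": ["8.8.8.8", "8.8.4.4"],
  "nics": [
    {
      "interface": "net0",
      "nic_tag": "admin",
      "ip": "$2",
      "gateway": "10.1.1.1",
      "netmask": "255.255.255.0"
    }
  ]
}
EOF
}

# Write both payloads into the current directory.
make_zone_json 1.leofs 10.1.1.21 3072 80 > leo-zone1.json
make_zone_json 2.leofs 10.1.1.22 512 20 > leo-zone2.json
```

Run this in /opt and then pass the files to vmadm create -f as usual.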

Next we create our LeoFS JSON payload files and create our 2 LeoFS zones:

```shell
cd /opt
vi leo-zone1.json
vi leo-zone2.json
vmadm create -f leo-zone1.json
vmadm create -f leo-zone2.json
```

The rest of the setup will be done within our newly created LeoFS zones.

(Step 2.1) Zone 1 Configuration

We zlogin to LeoFS Zone 1, add the FiFo package repository, and then install the LeoFS “Manager”, “Gateway” and “Storage” services.

```shell
zlogin <leo-zone1-uuid>
# Mount the zone's delegated dataset at /data
zfs set mountpoint=/data zones/$(zonename)/data
cd /data
# Import the FiFo package signing key into the pkgsrc keyring and show its fingerprint
curl -O https://project-fifo.net/fifo.gpg
gpg --primary-keyring /opt/local/etc/gnupg/pkgsrc.gpg --import < fifo.gpg
gpg --keyring /opt/local/etc/gnupg/pkgsrc.gpg --fingerprint
# Back up the repository list, then add the FiFo release repository
VERSION=rel
cp /opt/local/etc/pkgin/repositories.conf /opt/local/etc/pkgin/repositories.conf.original
echo "http://release.project-fifo.net/pkg/15.4.1/${VERSION}" >> /opt/local/etc/pkgin/repositories.conf
# Refresh the package database and install the LeoFS services
pkgin -fy up
pkgin install leo_manager leo_gateway leo_storage
```
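The echo ... >> command above appends the repository line every time it runs. Repeating the setup in a zone therefore leaves duplicate entries in repositories.conf. Below is a hedged sketch of an idempotent alternative. add_repo is a hypothetical helper, not a pkgin feature.

```shell
#!/bin/sh
# add_repo: hypothetical helper that appends a repository URL to a pkgin
# repositories.conf only if that exact line is not already present.
# Usage: add_repo <repositories.conf> <url>
add_repo() {
  conf=$1
  url=$2
  # -x matches whole lines, -F treats the URL as a fixed string (no regex).
  if grep -qxF "$url" "$conf"; then
    echo "already present: $url"
  else
    printf '%s\n' "$url" >> "$conf"
    echo "added: $url"
  fi
}

VERSION=rel
REPO_CONF=/opt/local/etc/pkgin/repositories.conf
# Only touch the file if it exists (i.e. inside a pkgsrc-based zone).
if [ -f "$REPO_CONF" ]; then
  add_repo "$REPO_CONF" "http://release.project-fifo.net/pkg/15.4.1/${VERSION}"
fi
```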

Warning

LeoFS uses replicas to ensure a certain consistency level for your data. Once the replica value (consistency.num_of_replicas) has been set and your cluster started, it can NOT be changed. You can still add storage nodes to the cluster, but your resiliency level will always remain the same.

Next we configure all the services in Zone 1 by editing each configuration file and changing ONLY the following settings. The rest of the config options remain unchanged:

leo_manager.conf

```shell
vi /data/leo_manager/etc/leo_manager.conf
```

```
nodename = manager0@10.1.1.21
manager.mode = master
distributed_cookie = QvTnSK0vrCohKMkw
manager.partner = manager1@10.1.1.22
consistency.num_of_replicas = 1
consistency.write = 1
consistency.read = 1
consistency.delete = 1
```
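Editing the same keys in several files by hand is error-prone, so the settings can also be applied non-interactively. This is a minimal sketch, not a LeoFS tool. set_conf is a hypothetical helper, and it assumes each setting is written as "key = value", with the key as the first whitespace-separated field on the line.

```shell
#!/bin/sh
# set_conf: hypothetical helper that sets "key = value" in a LeoFS .conf
# file. Uncommented lines whose first field is the key are replaced in
# place; if no such line exists, the setting is appended at the end.
# Note: awk -v interprets backslash escapes, so avoid backslashes in values.
# Usage: set_conf <file> <key> <value>
set_conf() {
  file=$1
  tmp="${file}.tmp.$$"
  awk -v k="$2" -v v="$3" '
    $1 == k { print k " = " v; found = 1; next }
    { print }
    END { if (!found) print k " = " v }
  ' "$file" > "$tmp" &&
    cat "$tmp" > "$file" &&  # rewrite in place, keeping owner and mode
    rm -f "$tmp"
}

CONF=/data/leo_manager/etc/leo_manager.conf
if [ -f "$CONF" ]; then
  set_conf "$CONF" nodename manager0@10.1.1.21
  set_conf "$CONF" manager.mode master
  set_conf "$CONF" distributed_cookie QvTnSK0vrCohKMkw
  set_conf "$CONF" manager.partner manager1@10.1.1.22
  set_conf "$CONF" consistency.num_of_replicas 1
  set_conf "$CONF" consistency.write 1
  set_conf "$CONF" consistency.read 1
  set_conf "$CONF" consistency.delete 1
fi
```

The same helper works for leo_gateway.conf and leo_storage.conf. Quote values that contain spaces, e.g. set_conf "$FILE" managers '[manager0@10.1.1.21, manager1@10.1.1.22]'.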

leo_gateway.conf

```shell
vi /data/leo_gateway/etc/leo_gateway.conf
```

```
distributed_cookie = QvTnSK0vrCohKMkw
managers = [manager0@10.1.1.21, manager1@10.1.1.22]
http.port = 80
http.ssl_port = 443
```

Tuning Large Object Sizes

The default value of large_object.max_chunked_objs in the leo_gateway.conf file is 1000, which limits a single object to roughly 5.24 GB. Please adjust yours accordingly: if you plan to back up a 100 GB machine, for example, 5.24 GB will not be enough. The limit is worked out as max_chunked_objs × large_object.chunked_obj_len (in bytes). The default is therefore 1000 × 5242880 bytes = 5,242,880,000 bytes, or about 5.24 GB (4.88 GiB).
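To choose a value, divide the largest object you expect to store by the chunk length and round up. The sketch below shows that arithmetic. It assumes the 5242880-byte chunk length quoted above, so check the actual large_object.chunked_obj_len in your leo_gateway.conf. chunks_needed is a hypothetical function name.

```shell
#!/bin/sh
# chunks_needed: hypothetical helper that prints the smallest
# large_object.max_chunked_objs value able to hold an object of the given
# size in bytes. The chunk length in bytes defaults to 5242880.
chunks_needed() {
  size=$1
  chunk_len=${2:-5242880}
  # Ceiling division: a partial final chunk still needs a whole chunk.
  echo $(( (size + chunk_len - 1) / chunk_len ))
}

# Default limit: 1000 chunks x 5242880 bytes.
echo $(( 1000 * 5242880 ))                       # 5242880000
# A 100 GiB backup (100 x 1024^3 bytes) needs this many chunks:
chunks_needed $(( 100 * 1024 * 1024 * 1024 ))    # 20480
```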

leo_storage.conf

```shell
vi /data/leo_storage/etc/leo_storage.conf
```

```
distributed_cookie = QvTnSK0vrCohKMkw
managers = [manager0@10.1.1.21, manager1@10.1.1.22]
```

(Step 2.2) Zone 2 Configuration

We now zlogin to LeoFS Zone 2, add the FiFo package repository, and then install the LeoFS “Manager” service.

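```shell
zlogin <leo-zone2-uuid>
# Mount the zone's delegated dataset at /data
zfs set mountpoint=/data zones/$(zonename)/data
cd /data
# Import the FiFo package signing key into the pkgsrc keyring and show its fingerprint
curl -O https://project-fifo.net/fifo.gpg
gpg --primary-keyring /opt/local/etc/gnupg/pkgsrc.gpg --import < fifo.gpg
gpg --keyring /opt/local/etc/gnupg/pkgsrc.gpg --fingerprint
# Back up the repository list, then add the FiFo release repository
VERSION=rel
cp /opt/local/etc/pkgin/repositories.conf /opt/local/etc/pkgin/repositories.conf.original
echo "http://release.project-fifo.net/pkg/15.4.1/${VERSION}" >> /opt/local/etc/pkgin/repositories.conf
# Refresh the package database and install the manager only
pkgin -fy up
pkgin install leo_manager
```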

leo_manager.conf

```shell
vi /data/leo_manager/etc/leo_manager.conf
```

```
nodename = manager1@10.1.1.22
manager.mode = slave
distributed_cookie = QvTnSK0vrCohKMkw
manager.partner = manager0@10.1.1.21
consistency.num_of_replicas = 1
consistency.write = 1
consistency.read = 1
consistency.delete = 1
```

(Step 3.1) Start Manager A

Zlogin to Zone 1 and enable the following services.

```shell
svcadm enable epmd
svcadm enable leofs/manager
```

(Step 3.2) Start Manager B

Zlogin to Zone 2 and enable the following services.

```shell
svcadm enable epmd
svcadm enable leofs/manager
```

Please be aware that the startup order is very important. The leofs-adm status command should show the manager service as up in BOTH zones before you continue.

The output of leofs-adm status should include a section named [System Configuration] showing the consistency level.
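Because the start order matters, it can help to wait until SMF reports the manager service as online before checking leofs-adm status. This is a hedged sketch. wait_until and manager_online are hypothetical helpers, and the sketch relies on svcs -H -o state for the leofs/manager service enabled above. An online SMF state only means the service has started, so still confirm the cluster view with leofs-adm status.

```shell
#!/bin/sh
# wait_until: hypothetical helper that re-runs a command until it succeeds
# or the number of attempts is exhausted.
# Usage: wait_until <attempts> <delay-seconds> <command> [args...]
wait_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# manager_online: succeeds when SMF reports leofs/manager as "online".
manager_online() {
  [ "$(svcs -H -o state leofs/manager 2>/dev/null)" = "online" ]
}

# Example (only runs where SMF is available, i.e. inside a SmartOS zone):
if command -v svcs >/dev/null 2>&1; then
  wait_until 30 2 manager_online && leofs-adm status
fi
```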

(Step 4) Start Storage

Zlogin to Zone 1 and enable the LeoFS Storage service and confirm it is running.

```shell
svcadm enable leofs/storage
```

Run leofs-adm status and confirm that the storage node is listed. Once confirmed, start the storage with the leofs-adm start command:

```shell
leofs-adm start
```

(Step 5) Start the Gateway

Zlogin to Zone 1 and enable the LeoFS Gateway service and confirm everything is running correctly. It may take a couple of seconds for the gateway to attach itself.

```shell
svcadm enable leofs/gateway
leofs-adm status
```

This part of the LeoFS setup is now complete. The final step, shown below, is performed after you return to the FiFo installation manual and continue with the rest of your FiFo setup.

Starting the LeoFS Cluster

The last step will be completed in the actual FiFo Zone once you have it up and running. You should now continue with the general FiFo Installation manual.

Once FiFo is configured, run the sniffle-admin init-leofs command from within your FiFo zone. It will set up the required users, buckets and endpoints.

```shell
sniffle-admin init-leofs 10.1.1.21.xip.io
```

Security Note

By default, LeoFS opens some ports for remote configuration. This is what allows the FiFo sniffle-admin init-leofs command to configure LeoFS remotely. Once your installation has completed, please consider closing these ports to further secure your environment, for example with the firewall feature.
